You're rewriting a blog post to appeal to technical readers by changing capitalization, using em dashes, and correcting grammar. You're also contemplating a deeper change in your writing style to better reflect your thoughts and engagement with the world.

The IPv4 address market has devolved into a wild sub-leasing economy where companies can choose the geolocation of their IP addresses, scrub an address's history, and rent residential IPs to make their traffic appear legitimate. This infrastructure undermines the trust systems the internet was built on, making spam blacklists, geolocation databases, and IP reputation scoring unreliable.

Moongate v2 is a modern Ultima Online server project built with .NET 10, aiming for a clean, modular architecture with strong packet tooling and deterministic game-loop processing. The project is open to contributors and welcomes pull requests that follow the project's coding standards and include tests. It is licensed under the GNU General Public License v3.0.

Open Camera is a free, open-source Android camera app with manual controls and features such as geotagging and RAW file support. It requires Android 5.0 or later and uses Google's Material Design icons under the Apache License.

Math Notepad is a web-based editor for doing mathematical calculations and plotting graphs. It supports real and complex numbers, matrices, and units.

Sam and Michael from Palus Finance are building a treasury management platform that lets startups earn higher yields through a high-yield bond portfolio. The platform targets 4.5–5% returns, versus 3.5% from money market funds, for a flat 0.25% annual fee.
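A quick way to see what the fee does to the comparison is to net it out. This is a minimal sketch under stated assumptions: the 4.5–5% target is taken as gross of the 0.25% fee, the 3.5% money market figure is taken as already net, the fee is a simple percentage of assets, and compounding, credit risk, and duration risk are ignored. The balance and helper names are hypothetical.

```python
def net_yield(gross_rate: float, annual_fee: float) -> float:
    # Assumption: the fee is a flat percentage of assets, deducted once per year.
    return gross_rate - annual_fee

def annual_income(balance: float, rate: float) -> float:
    # Simple one-year income, no compounding.
    return balance * rate

FEE = 0.0025          # flat 0.25% annual fee (from the description)
MONEY_MARKET = 0.035  # 3.5% money market comparison (from the description)
balance = 1_000_000   # hypothetical treasury balance

for gross in (0.045, 0.05):
    net = net_yield(gross, FEE)
    extra = annual_income(balance, net) - annual_income(balance, MONEY_MARKET)
    print(f"gross {gross:.2%} -> net {net:.2%}, extra vs money market: ${extra:,.0f}")
```

Under these assumptions the fee narrows the advantage to 0.75 to 1.25 percentage points, or about $7,500 to $12,500 a year on the hypothetical $1M balance.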

This address is verbatim from the California Public Utilities Commission. The pin on the map is a best guess and might be a little off, or the phone might not even be there anymore. The first person to call from a payphone can leave a voicemail that gets posted here.

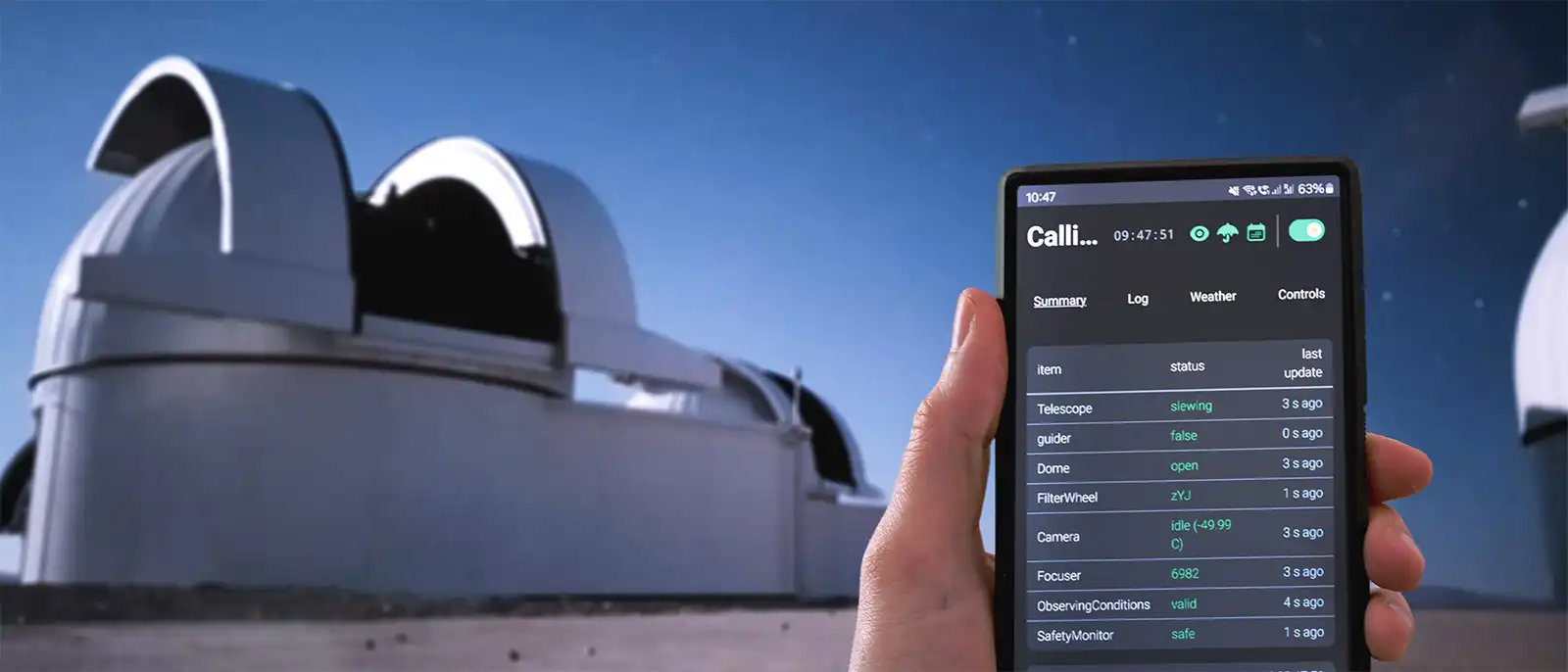

Astra (Automated Survey observaTory Robotised with Alpaca) is open-source observatory control software for automating and managing robotic observatories. It integrates seamlessly with ASCOM Alpaca for hardware control. Its web interface lets you manage your observatory from any browser; to reach it from outside your network, use cloudflared or a similar tunnel.
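For context on the hardware side, ASCOM Alpaca exposes devices over a REST API whose endpoints follow the pattern `/api/v1/{device_type}/{device_number}/{method}`, with `ClientID` and `ClientTransactionID` parameters and JSON responses carrying `Value`, `ErrorNumber`, and `ErrorMessage`. The sketch below only builds such a URL and parses a response body, so it runs without any hardware. The host, port, and helper names are hypothetical, and this is not how Astra itself is implemented.

```python
import json
from urllib.parse import urlencode

def alpaca_url(host: str, port: int, device_type: str, device_number: int,
               method: str, client_id: int = 1, transaction_id: int = 1) -> str:
    # Alpaca device endpoints: /api/v1/{device_type}/{device_number}/{method}
    query = urlencode({"ClientID": client_id, "ClientTransactionID": transaction_id})
    return (f"http://{host}:{port}/api/v1/"
            f"{device_type.lower()}/{device_number}/{method.lower()}?{query}")

def parse_alpaca_response(body: str):
    # A nonzero ErrorNumber signals a device-level error; otherwise return Value.
    data = json.loads(body)
    if data.get("ErrorNumber", 0) != 0:
        raise RuntimeError(f"Alpaca error {data['ErrorNumber']}: {data.get('ErrorMessage', '')}")
    return data.get("Value")

# Hypothetical telescope on a local Alpaca server; no request is actually sent.
print(alpaca_url("localhost", 11111, "telescope", 0, "connected"))
sample = '{"Value": true, "ClientTransactionID": 1, "ServerTransactionID": 7, "ErrorNumber": 0, "ErrorMessage": ""}'
print(parse_alpaca_response(sample))
```

A real client would send the request with an HTTP library and increment `ClientTransactionID` per call so requests can be matched to responses.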

OBLITERATUS is an open-source toolkit for removing refusal behaviors from large language models, making them respond to all prompts without artificial gatekeeping. It implements abliteration techniques, allowing users to contribute to a crowd-sourced dataset that powers the next generation of abliteration research.

We're hiring an Engineering Lead to own technical execution, shape engineering culture, and define development practices for a world-class engineering org. The ideal candidate has 8+ years of software engineering experience, strong skills building at the systems level, and experience designing and shipping backend systems.

This program is distributed under the GNU General Public License Version 2.

The user discusses a technique for rendering fog of varying density using the Beer-Lambert law and ray marching, and provides examples of radial density functions and their antiderivatives for integrating the density along a given ray. The technique can be used to create complex fog or smoke-like effects, and it can be combined with particle systems or boolean operations to produce dynamic or animated fog.
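To make the antiderivative idea concrete, here is a minimal Python sketch under assumed choices: a single density blob sigma(p) = max(0, 1 - |p|^2/R^2) centered at the origin (a hypothetical example, not necessarily one of the functions from the post). Writing the ray as p(t) = o + t*d with unit d, c = o.d and h^2 = |o|^2 - c^2, the density along the ray becomes (1 - h^2/R^2) - u^2/R^2 with u = t + c, whose antiderivative is (1 - h^2/R^2)*u - u^3/(3R^2). The optical depth tau is that antiderivative evaluated over the part of [t0, t1] inside the sphere, and Beer-Lambert gives transmittance T = exp(-tau). A brute-force ray-marched integral is included for comparison; all function names are hypothetical.

```python
import math

def _unit(v):
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v]

def optical_depth_analytic(origin, direction, radius, t0, t1):
    # Closed-form integral of sigma(p) = max(0, 1 - |p|^2 / R^2) along p(t) = o + t*d.
    d = _unit(direction)
    c = sum(o * di for o, di in zip(origin, d))  # o . d
    h2 = sum(o * o for o in origin) - c * c      # squared distance from center to ray line
    r2 = radius * radius
    if h2 >= r2:
        return 0.0                               # ray line misses the blob
    half = math.sqrt(r2 - h2)                    # density is nonzero for |u| < half, u = t + c
    u0, u1 = max(t0 + c, -half), min(t1 + c, half)
    if u1 <= u0:
        return 0.0                               # segment lies outside the blob
    def antiderivative(u):
        return (1.0 - h2 / r2) * u - u ** 3 / (3.0 * r2)
    return antiderivative(u1) - antiderivative(u0)

def density(p, radius):
    return max(0.0, 1.0 - sum(x * x for x in p) / (radius * radius))

def optical_depth_marched(origin, direction, radius, t0, t1, steps=1000):
    # Midpoint-rule ray march of the same density, for comparison.
    d = _unit(direction)
    dt = (t1 - t0) / steps
    total = 0.0
    for i in range(steps):
        t = t0 + (i + 0.5) * dt
        p = [o + t * di for o, di in zip(origin, d)]
        total += density(p, radius) * dt
    return total

def transmittance(tau):
    # Beer-Lambert: fraction of light surviving the path.
    return math.exp(-tau)

origin, direction = (0.0, 0.5, -3.0), (0.0, 0.0, 1.0)
tau = optical_depth_analytic(origin, direction, 1.0, 0.0, 6.0)
print(tau, optical_depth_marched(origin, direction, 1.0, 0.0, 6.0), transmittance(tau))
```

For a ray passing 0.5 units from the center of a unit blob, the analytic result is sqrt(3)/2, about 0.866, and the ray-marched value converges to it. The closed form costs a few operations regardless of step count, which is the payoff of deriving antiderivatives for each radial function.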

Several hosting providers allow Tor exit nodes with reduced exit policies, including Linode, MaxKO Hosting, and r0cket.cloud, while others prohibit them or have strict policies against abuse. Some providers, such as Ultra.cc and Hostinger, explicitly forbid Tor relays and exit nodes, while others, like Free Speech Host and Gigahost, allow Tor relays but not exit nodes.

Ignoring the midterm hysteria, we continue our obsession with SoftBank today by looking at the group’s IPO of its telecom unit. But first, some thoughts about Form Ds. Recently, I was looking up the investment history of Patreon (Note: I was an investor in the company through my previous venture firm CRV). I did what I normally do: I went straight to the SEC’s EDGAR system and started ...

TypeScript 6.0 is a transition release preparing developers for TypeScript 7.0, with breaking changes and deprecations to align with the evolving JavaScript ecosystem. It introduces new features and improvements, including support for the es2025 target, Temporal API types, and upsert methods on Map and WeakMap.

Recent analysis found that global warming has accelerated since 2015, with the warming rate exceeding that of previous 10-year periods. The adjusted data account for natural variability factors such as El Niño, volcanism, and solar variation.
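One common way to produce adjusted data like this is multiple linear regression: fit temperature against a time trend plus series for the natural factors, then subtract the fitted factor terms. Whether the analysis used exactly this setup (for example, which factor series and lags) is an assumption here. The sketch below uses stdlib-only least squares, adds a hypothetical hinge term max(0, t - t_break) so the trend can change slope at a chosen break year, and runs on entirely made-up, noise-free data; all helper names are hypothetical.

```python
import math

def solve_linear(a, b):
    # Gaussian elimination with partial pivoting for a small dense system a x = b.
    n = len(b)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for j in range(col, n + 1):
                m[r][j] -= f * m[col][j]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        x[i] = (m[i][n] - sum(m[i][j] * x[j] for j in range(i + 1, n))) / m[i][i]
    return x

def ols(columns, y):
    # Ordinary least squares via the normal equations (X^T X) beta = X^T y.
    k = len(columns)
    xtx = [[sum(p * q for p, q in zip(columns[i], columns[j])) for j in range(k)] for i in range(k)]
    xty = [sum(p * q for p, q in zip(columns[i], y)) for i in range(k)]
    return solve_linear(xtx, xty)

def fit_with_natural_factors(t, temps, factors, t_break):
    # Regressors: intercept, linear trend, hinge (slope change at t_break), then one column per factor.
    hinge = [max(0.0, ti - t_break) for ti in t]
    beta = ols([[1.0] * len(t), list(t), hinge] + factors, temps)
    # Subtract each factor's fitted contribution to get the adjusted series.
    adjusted = list(temps)
    for b, series in zip(beta[3:], factors):
        adjusted = [a - b * s for a, s in zip(adjusted, series)]
    return beta[1], beta[1] + beta[2], adjusted  # slope before, slope after, adjusted

# Made-up yearly data: slope change at t = 25 plus ENSO-like and volcanic-like terms.
t = [float(i) for i in range(40)]
enso = [math.sin(0.9 * ti) for ti in t]
volcanic = [math.exp(-(ti - 10.0)) if ti >= 10.0 else 0.0 for ti in t]
temps = [0.2 + 0.018 * ti + 0.022 * max(0.0, ti - 25.0) + 0.1 * e - 0.3 * v
         for ti, e, v in zip(t, enso, volcanic)]

before, after, adjusted = fit_with_natural_factors(t, temps, [enso, volcanic], t_break=25.0)
print(f"slope before: {before:.4f}, after: {after:.4f}")
```

Fitting the factors and the trend jointly, rather than removing the factors first, keeps a strong El Niño year near the break from being mistaken for a change in the underlying trend.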