A study on LLM-assisted essay writing found that users exhibited weaker brain connectivity and lower cognitive activity when using LLMs. Long-term reliance on LLMs may have negative educational implications, including underperformance and decreased neural engagement.

Sweep Next-Edit predicts your next code edit before you make it. It runs locally on your laptop in under 500ms (with speculative decoding) and outperforms models over 4x its size on next-edit benchmarks. The model uses a specific prompt format with file context, recent diffs, and current state to predict the next edit. See run_model.py for a complete example.

Skip is now completely free and open source, allowing developers to create native cross-platform apps without compromises. The platform's future is sustained through sponsorships and community support, ensuring its long-term success.

Google's monopoly on search has stifled innovation and access to information, prompting a US court to rule against Google and order open index access. The ruling aims to create a more competitive search market, enabling companies like Kagi to provide ad-free search experiences and promote a non-commercial baseline for information access.

Bending Spoons acquired Vimeo in a $1.38B deal. Its previous acquisitions were followed by layoffs and cost-cutting. The model may aim to run acquired products at a profit before breaking them up, but it is unclear where the money comes from or how growth would be sustained.

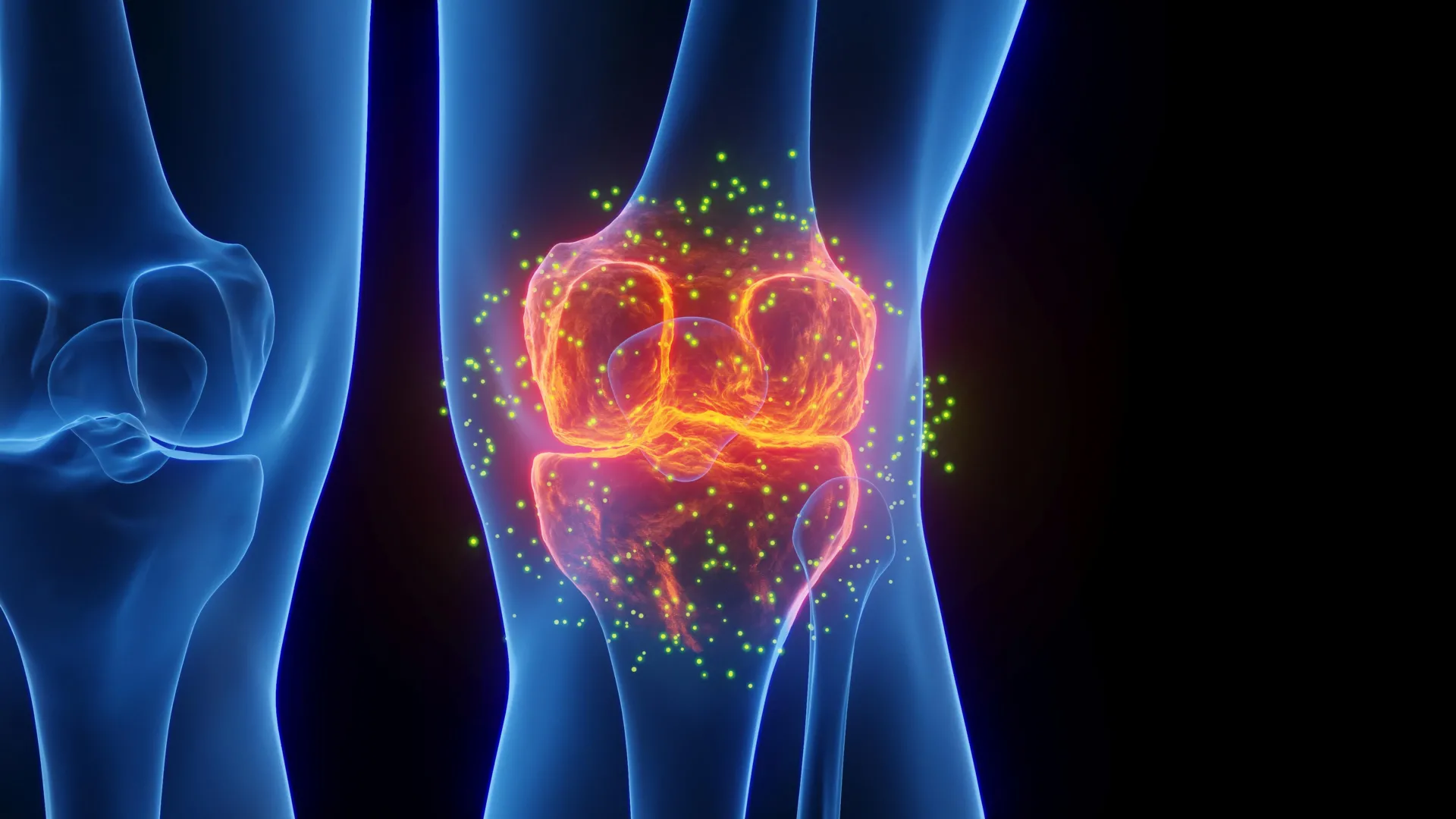

Scientists at Stanford Medicine discovered a treatment that reverses cartilage loss in aging joints and prevents arthritis after knee injuries by blocking a protein linked to aging. The treatment restored healthy cartilage in old mice and human cartilage samples, improving movement and joint function.

Civic institutions like universities and free press are crucial for democratic life, but AI systems are degrading them by eroding expertise and isolating people. Current AI systems are a death sentence for civic institutions due to their lack of transparency, cooperation, and accountability.

Two SETI@home papers will be published in The Astronomical Journal, detailing data acquisition and analysis. A website outage caused by multiple disk failures has been resolved.

Corey Dumm, a spiky-haired nerd, lives in a world where Markdown rules vary. He faces problems with triple backticks in fenced code blocks and inline code spans.
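
The backtick problem described above can be illustrated with a minimal sketch, assuming CommonMark's rule that a fence or code-span delimiter must be longer than any run of backticks in the content it wraps. Since Markdown rules vary, other flavors may behave differently. The helper names `longest_backtick_run`, `fence`, and `inline_code` are hypothetical and do not come from the original article.

```python
import re


def longest_backtick_run(text: str) -> int:
    """Length of the longest run of consecutive backticks in text (0 if none)."""
    return max((len(run) for run in re.findall(r"`+", text)), default=0)


def fence(code: str, info: str = "") -> str:
    """Wrap code in a backtick fence longer than any backtick run it contains."""
    ticks = "`" * max(3, longest_backtick_run(code) + 1)
    return f"{ticks}{info}\n{code}\n{ticks}"


def inline_code(text: str) -> str:
    """Wrap text in a code span whose delimiter is longer than any inner run."""
    ticks = "`" * (longest_backtick_run(text) + 1)
    # Pad with one space on each side when the content starts or ends with a
    # backtick, so the content does not merge with the delimiter.
    if text.startswith("`") or text.endswith("`"):
        text = f" {text} "
    return f"{ticks}{text}{ticks}"
```

Content that itself contains a three-backtick fence would be wrapped in a four-backtick fence, and an inline span containing a single backtick would get a two-backtick delimiter.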

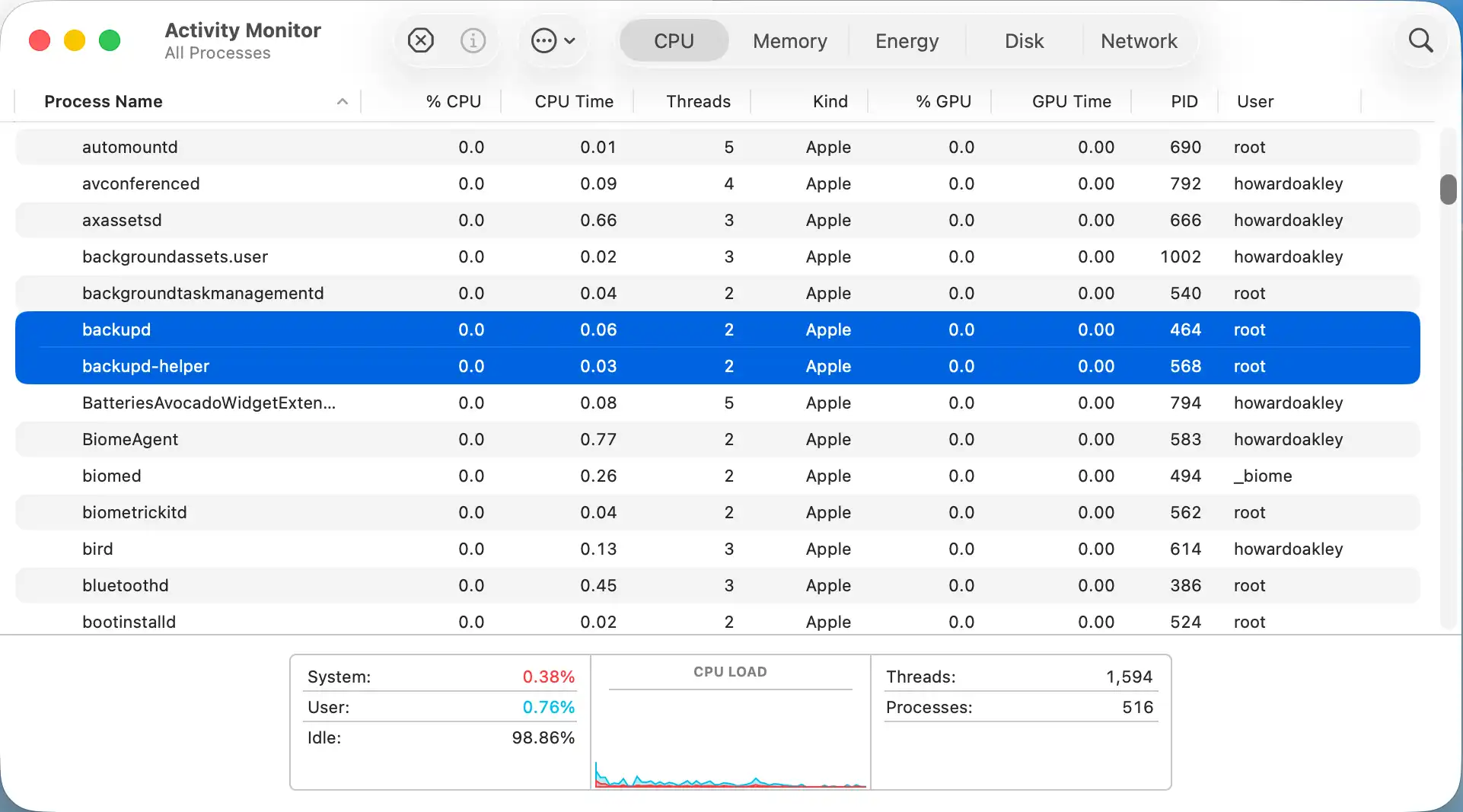

The user discusses the numerous processes running on a Mac, including those related to Time Machine, and explores ways to disable them due to their resource usage. However, due to the Signed System Volume (SSV) and Duet Activity Scheduler (DAS), it's not possible to stop or disable these processes, making it difficult to pare down the Mac's processes.

This webpage displays a JPEG XL image, currently only viewable in Safari, and discusses its history and re-implementation in Chrome. The website was built with blag and has contact information via Mastodon and Codeberg.

A quiet revolution in AI agents occurred during the 2025 winter holidays, with a critical mass of developers reaching the "production patterns" stage of understanding. The repository of 113 patterns represents the accumulated wisdom of teams who've shipped agents to production, providing a map for developers to navigate the territory of agentic AI.

The user optimized the SGP4 propagation algorithm in Zig, achieving 11-13M propagations per second, and created a library called astroz that is faster than other implementations for certain use cases. The library uses SIMD and comptime precomputation to achieve high performance and can be used for tasks such as generating ephemeris data and collision screening snapshots.

SmartOS is a Type 1 Hypervisor platform based on illumos, offering virtualization solutions for OS and hardware virtual machines. It supports live OS booting, increased security, and fast upgrades.

The guide promotes simple, level-headed personal preparedness techniques to cope with life's risks, focusing on everyday scenarios like job loss, water outages, and financial insolvency. It emphasizes the importance of having a rainy-day fund, being mindful of expenses, and avoiding debt to ensure financial continuity.

The user created a tool called box that allows running ad-hoc code on ad-hoc clusters using a direct manipulation API, and used AI to help implement it. The user found that AI was good at producing results but not necessarily good code, and that an incremental approach worked better than trying to write the entire code at once.

The user created Muky, a digital app for kids, after their child outgrew the Toniebox and wanted more content options. The app offers a balance between accessibility and parental control, with features like QR code sharing and curated content discovery.

A PCMCIA development board for retro-computing enthusiasts offers audio, networking, and expansion capabilities for vintage laptops and mobile devices. It features open-source firmware, wireless and Bluetooth connectivity, and emulates various audio and storage devices for DOS gaming and other applications.

GenAI tools are increasing human productivity but quietly destroying the ecosystems that made them possible by extracting value from content created by humans without giving back to the creators. A pay-per-use model is proposed as a solution to provide value to content creators, where GenAI tools list sources and offer revenue sharing for used content.

European Commission president Ursula von der Leyen reiterated that Greenland's future is for the Greenlanders to decide, while warning Europe to step up and become more independent in a rapidly changing world order. European leaders are bracing for Donald Trump's address at Davos, where he will discuss his views on the emerging global order, Ukraine, and EU-US trade.

The author visits San Francisco's Haight-Ashbury neighborhood in 1967 to explore the counterculture movement, meeting various young people who have abandoned mainstream society. They witness a mix of free-spirited idealism and chaos, with some individuals struggling with addiction, mental health issues, and the consequences of their actions.

The user regretted installing Windows 11 because of its slowness, bugs, and missing features. They downgraded to Windows 10, wiping their system and reinstalling essential software.

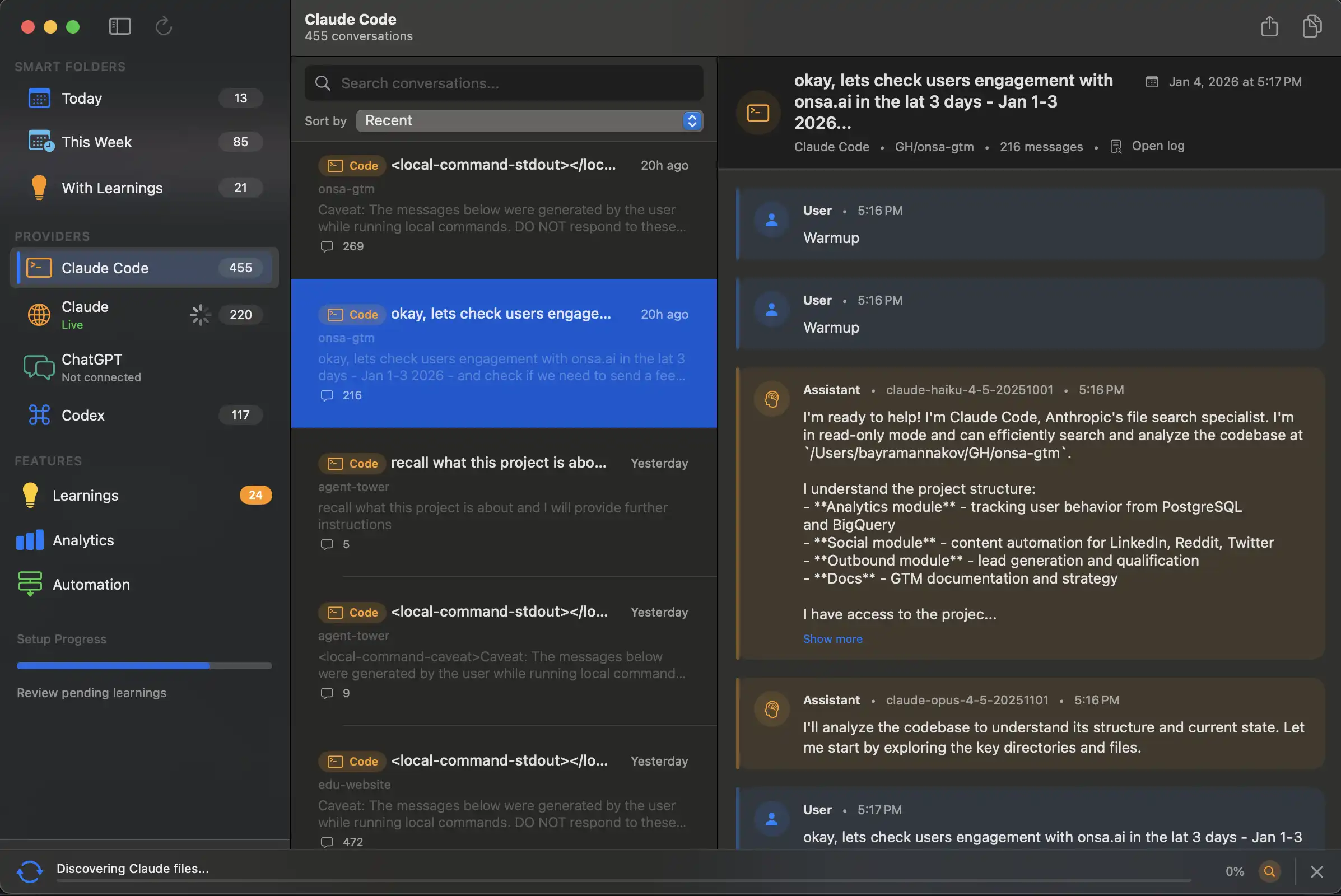

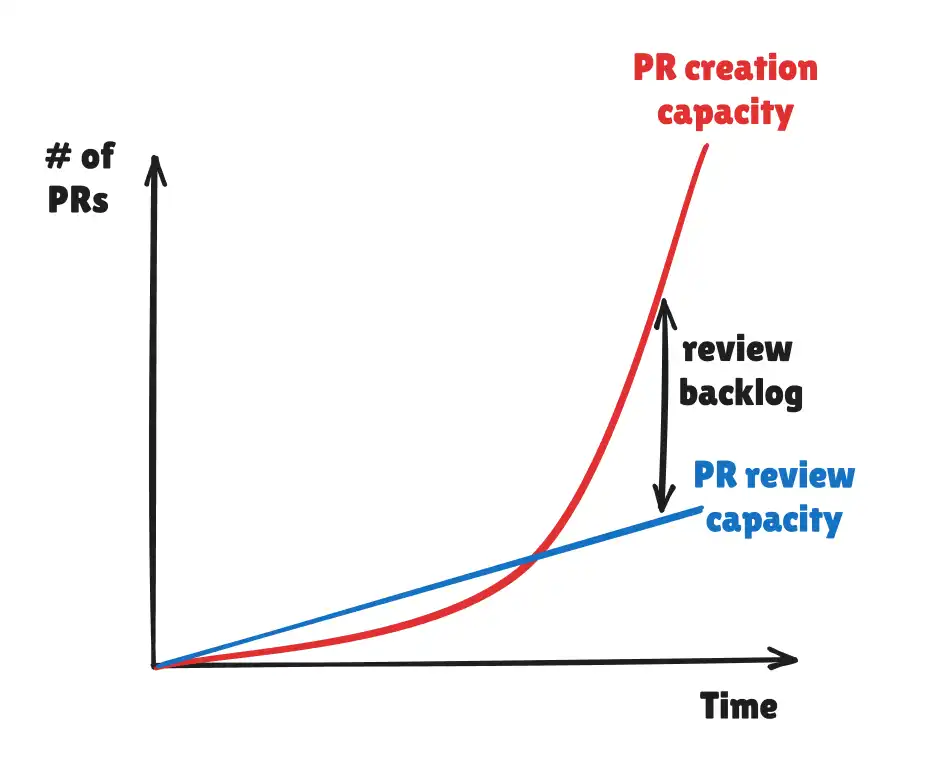

The user automates coding work with AI tools like Claude Code and Opus, using them for tedious tasks and high-level guidance. They stay in control of architecture decisions and codebase understanding, treating AI like an intern needing constant feedback and reviews.

Amazon deactivated a seller's account for IP violations despite clear product descriptions. The seller submitted a notarized authorization letter but was still unable to get the account reinstated.

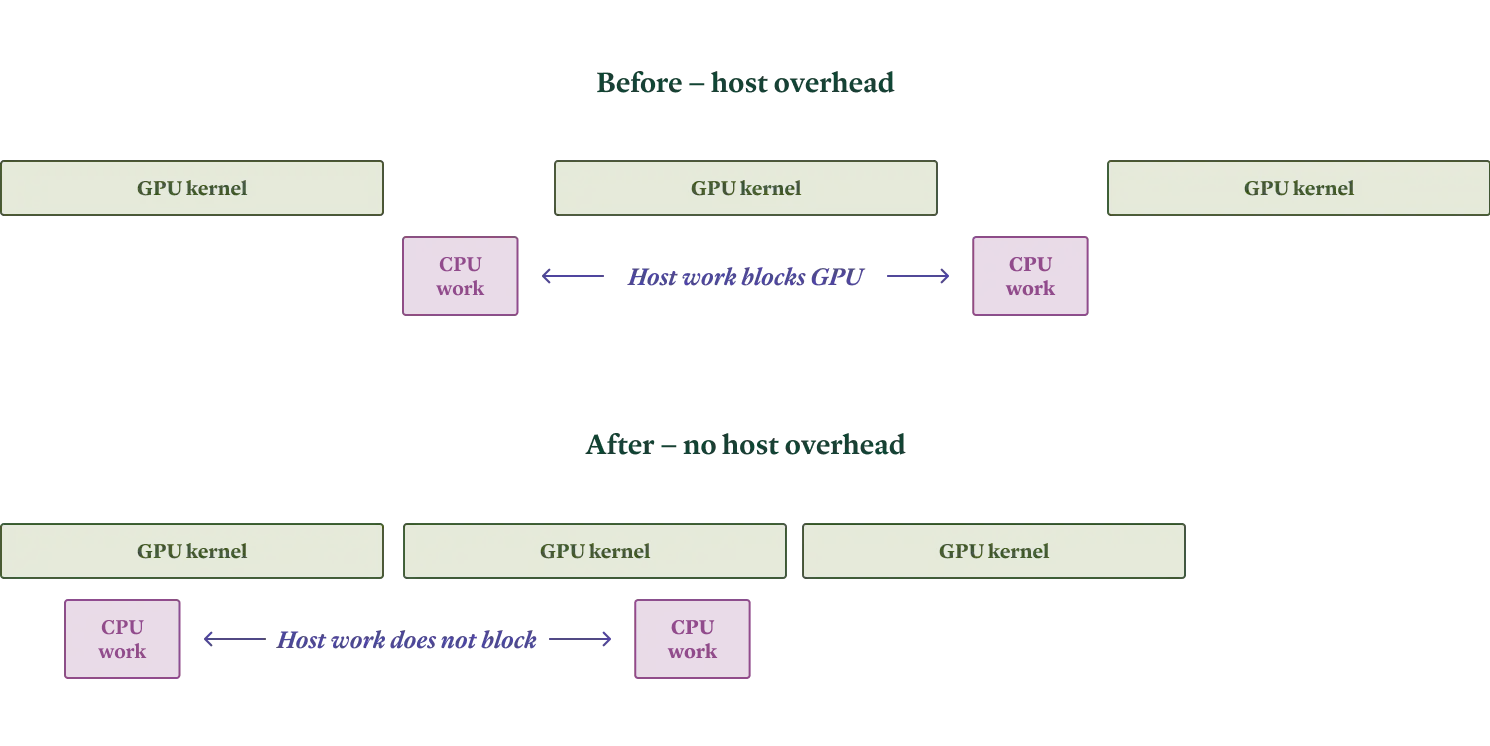

Large language model workloads can be categorized into offline, online, and semi-online types, each requiring different architectures and optimizations to achieve maximum throughput and low latency. The choice of inference engine, such as vLLM or SGLang, and hardware, including GPUs like H100s and H200s, depends on the specific workload and requirements, with considerations for memory ...

StarRocks optimizes joins by using a cost-based optimizer, logical join optimizations, join reordering, and distributed join planning to minimize network cost and leverage parallel execution. The system uses various techniques such as predicate pushdown, equivalence derivation, and join reordering algorithms like Exhaustive and Greedy to generate optimal join plans.
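
To make the idea of greedy join reordering concrete, here is a minimal sketch of one common greedy heuristic: start from the smallest table, then repeatedly add the table that yields the smallest estimated intermediate result. This is not StarRocks's actual algorithm or cost model. The function names, table names, row counts, and selectivities are all assumptions made up for illustration.

```python
def estimate_rows(joined, rows, table, cards, selectivity):
    """Estimated row count after joining `table` onto the current result.

    Hypothetical cost model: multiply the row counts, then apply the
    selectivity of every join predicate linking `table` to a joined table.
    A missing predicate means selectivity 1.0 (a cross join).
    """
    sel = 1.0
    for other in joined:
        sel *= selectivity.get(frozenset((other, table)), 1.0)
    return rows * cards[table] * sel


def greedy_join_order(cards, selectivity):
    """Return (join order, estimated final rows) chosen greedily."""
    remaining = set(cards)
    # Start with the smallest table; break ties by name for determinism.
    first = min(remaining, key=lambda t: (cards[t], t))
    order, rows = [first], float(cards[first])
    remaining.remove(first)
    while remaining:
        # Pick the table whose join gives the smallest estimated result.
        nxt = min(
            remaining,
            key=lambda t: (estimate_rows(order, rows, t, cards, selectivity), t),
        )
        rows = estimate_rows(order, rows, nxt, cards, selectivity)
        order.append(nxt)
        remaining.remove(nxt)
    return order, rows


# Illustrative inputs only; none of these figures come from StarRocks.
cards = {"orders": 1_000_000, "customers": 10_000, "nations": 25}
selectivity = {
    frozenset(("orders", "customers")): 1 / 10_000,
    frozenset(("customers", "nations")): 1 / 25,
}
order, rows = greedy_join_order(cards, selectivity)
print(order)  # → ['nations', 'customers', 'orders']
```

A greedy pass like this evaluates far fewer candidate plans than an exhaustive search, at the cost of possibly missing the cheapest plan.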

The user set up a cluster of tiny PCs for parallel computing using Ubuntu, passwordless SSH, and R simulations, and compared the performance of CV5 and CV10. They found that tuned xgboost + glmnet had the best results with low bias, low variance, and decent coverage, while gam + lr had high bias and asymmetrical coverage.

Note: These dashboards are not the source of truth. Job posting data can include duplicates and may double count roles. Treat the results as a sample that helps explain what the postings suggest about hiring focus. Summaries are generated with an LLM and may contain errors or omissions. For the most accurate and complete information, research the company directly.

Discover beautiful moments from Studio Ghibli films

The user created sway-layout to automate window layout on Arch Linux with Ansible. It spawns windows at once, tracks processes, and rearranges them, but has limitations and areas for improvement.

The report isolates the claim that reliability in an Amazon shopping flow comes from verification, not from giving the model more pixels or parameters. It uses a verification layer with explicit assertions over structured snapshots. A strong planner paired with a small local executor, using Sentience for verification, achieves reliable end-to-end behavior with lower token usage and improved economics.
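
As a rough illustration of explicit assertions over structured snapshots, the sketch below checks a dictionary snapshot against named predicates after each action. It does not use or reproduce the Sentience API. `Assertion`, `verify`, `run_step`, `VerificationError`, and the snapshot fields are hypothetical names chosen for this example.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Assertion:
    """A named predicate over a structured snapshot (here, a plain dict)."""
    description: str
    check: Callable[[dict], bool]


class VerificationError(Exception):
    """Raised when one or more assertions fail after an action."""


def verify(snapshot: dict, assertions: list) -> list:
    """Return the descriptions of every assertion the snapshot fails."""
    return [a.description for a in assertions if not a.check(snapshot)]


def run_step(action: Callable[[], None],
             take_snapshot: Callable[[], dict],
             assertions: list) -> None:
    """Run one action, then verify the resulting state before moving on."""
    action()
    failures = verify(take_snapshot(), assertions)
    if failures:
        raise VerificationError("; ".join(failures))
```

The point of the design is that each step ends with a cheap, deterministic check, so a failure is detected where it happens instead of surfacing several steps later.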

Great news! @mariaingaramo.bsky.social's company (Nonfiction Labs) made a remote-controlled antibody. Its binding turns on and off with a magnet. This is a HUGE step towards our dream of magnetically controlled drugs. Imagine a cancer drug that ONLY attacks the tumor, not the rest of your body.

A microservice architecture can provide benefits such as lower costs of change, reusability, and maintainability, but it requires careful planning and division of responsibilities among services. To achieve these benefits, services should be designed to have a single responsibility, use technology-agnostic protocols, and be loosely coupled, with a focus on maximizing cohesion and minimizing coupling.

ScratchTrack uses Git-style branching for audio production, allowing for experimentation and collaboration without conflicts. It preserves every recording and edit decision, enabling easy reverts and comparisons.

The game "Constellations" involves participants placing hands on the shoulders of those they think fit a given prompt, with the facilitator aiming to balance truthfulness and edginess. As the prompts become more sensitive, participants may prioritize avoiding offense over honesty, but the game can also reveal authentic connections and dynamics within the group.

Git-like automatic versioning with zero-cost abstraction. Updates or deletes automatically create new versions, so full history, an audit trail, and rollbacks are available out of the box. Replay context or debug what went wrong. With long-running agents, MCPs, and sub-agents, context fragments fast; this gives one place to read and write, scoped however you need. Framework-agnostic.
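
The versioning behavior described above (every update or delete appends a new version, and rollbacks are possible) can be sketched with an append-only in-memory store. This is only an illustration of the general technique, not the product's implementation. `Version`, `VersionedStore`, and all method names are hypothetical.

```python
from dataclasses import dataclass
from typing import Any, Optional


@dataclass(frozen=True)
class Version:
    number: int            # 1-based version number for the key
    value: Any
    deleted: bool = False  # a delete is recorded as a tombstone version


class VersionedStore:
    """Append-only key-value store: nothing is ever overwritten."""

    def __init__(self) -> None:
        self._history = {}  # key -> list of Version, oldest first

    def _append(self, key: str, value: Any, deleted: bool) -> Version:
        versions = self._history.setdefault(key, [])
        version = Version(len(versions) + 1, value, deleted)
        versions.append(version)
        return version

    def put(self, key: str, value: Any) -> Version:
        return self._append(key, value, deleted=False)

    def delete(self, key: str) -> Version:
        return self._append(key, None, deleted=True)

    def history(self, key: str) -> list:
        """Full audit trail for a key, oldest version first."""
        return list(self._history.get(key, []))

    def get(self, key: str, version: Optional[int] = None) -> Any:
        versions = self._history.get(key, [])
        if not versions:
            raise KeyError(key)
        if version is None:
            entry = versions[-1]
        elif 1 <= version <= len(versions):
            entry = versions[version - 1]
        else:
            raise KeyError(f"{key}@{version}")
        if entry.deleted:
            raise KeyError(key)
        return entry.value

    def rollback(self, key: str, version: int) -> Version:
        """Restore an old version by appending a copy of it as the newest one."""
        versions = self._history.get(key, [])
        if not 1 <= version <= len(versions):
            raise KeyError(f"{key}@{version}")
        old = versions[version - 1]
        return self._append(key, old.value, old.deleted)
```

Because a rollback appends a copy of the old version instead of truncating the list, the audit trail stays complete even after reverting.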

Talks will run four hours daily with staggered start times for different time zones. The schedule features a mix of casual and formal presentations for a balanced and diverse program.

Linux is generally considered safe for daily use, especially if you stick to well-maintained distributions, keep your system up to date, and only use software from trusted sources. However, sandboxing and full disk encryption are recommended to enhance security, and users should be cautious when installing third-party software and extensions.

Users can speak naturally at 220+ WPM with AI automatically removing filler words and adding punctuation. SpeechOS transforms text with custom voice commands and editing capabilities.

The author is experimenting with PicoFlow, a Python library for building LLM agent workflows with a lightweight DSL. It focuses on async functions, minimal core concepts, and explicit data flow, aiming for a simpler, more Pythonic approach to agent logic composition.

A developer successfully integrated WebView into the Nature programming language, resolving major issues and enabling GUI support, but had to abandon static compilation due to compatibility challenges with WebKit and GTK. The solution involved using the system stack directly as the shared coroutine stack and creating a timer in JavaScript to yield the coroutine scheduler, allowing for a ...